Translating Thoughts Into Words: Advances In Brain-Machine Communication

(Posted on Wednesday, August 16, 2023)

This article was originally published on Forbes on 8/16/23.

This story is part of a series on current progress in regenerative medicine. This piece discusses advances in brain-computer interfaces.

In 1999, I defined regenerative medicine as the collection of interventions that restore to normal function tissues and organs that have been damaged by disease, injured by trauma, or worn by time. I include a full spectrum of chemical, gene, and protein-based medicines, cell-based therapies, and biomechanical interventions that achieve that goal.

A new brain-computer interface decoder enables researchers to reconstruct continuous language using only fMRI brain scans. When we speak, the brain sends electrical signals to the lungs, which push air through the trachea and larynx to produce sound; we then shape that sound with our tongue and lips to form words. Dr. Jerry Tang and colleagues from the University of Texas at Austin recently described, in Nature Neuroscience, a system that generates language using only brain activity. The decoder uses fMRI brain scans to determine the words being imagined and reproduces those words in an electronic format, which could aid nonverbal communication in the future. Here we analyze their findings and discuss implications for brain-machine communication.

When we think of words or phrases in our heads, many of the same brain areas that control speech are activated. Using fMRI scans taken while individuals read and, later, while they silently thought, the researchers trained the decoding software to map each individual’s speech and thought patterns, enabling it to recognize brain signals and translate them into words or phrases. They had the individuals read for 16 hours, with the decoder mapping each brain image and associating it with a word or phrase.

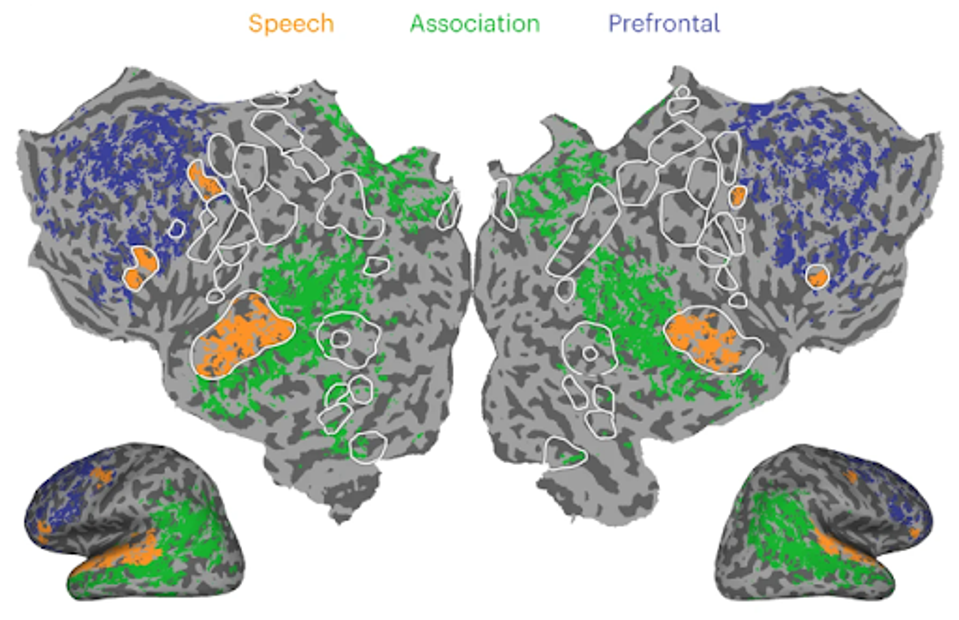

The map included three subsets of the activated brain: speech, association, and prefrontal. The difficulty faced was that each region, when analyzed by the decoder, would produce a different word sequence. Tang and colleagues suggest this is because there are many more words than possible brain images, leading to the decoder giving its best estimation from the 16-hour reading dataset.

FIGURE 1: Cortical regions for one subject. Brain data used for decoding (colored regions) were partitioned into the speech network, the parietal-temporal-occipital association region and the prefrontal cortex (PFC) region.

For example, the following phrase was presented as the stimulus: “I drew out this map for you and you’re really you’re like a mile and a half from home.” Decoding from the left prefrontal cortex produces the sequence “the number on a map and find out how far they had to drive to reach the address,” whereas decoding from the right prefrontal cortex produces “just to see how long it takes so I drove down the hill and over to the bank.”

These are three completely different phrases, but all touch on similar ideas, such as distances and maps. The researchers used several metrics to grade the decoded text. I find BERTScore the most intriguing: it quantifies the shared meaning of two sequences. The researchers quickly found that exact translations were uncommon, so they tested whether the decoder could at least relay the core meaning of a sentence. Notably, between 72% and 82% of sequences scored highly on BERTScore, meaning that most of the time the decoder conveyed the meaning behind the intended thought.
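To make the idea of scoring shared meaning more concrete, here is a rough sketch of the greedy matching step that BERTScore is built on: each word in one sequence is paired with its most similar word in the other, and the similarities are averaged. This is only an illustration under loud assumptions. The real metric uses contextual embeddings from a BERT model, whereas the three-dimensional word vectors below, the `TOY_VECTORS` table, and the function name `greedy_match_score` are all invented here and do not come from the study.

```python
import math

# Hypothetical 3-dimensional word vectors, invented for illustration only.
# Rough axes: (travel/distance, place, food). Real BERTScore would obtain
# contextual embeddings from a BERT model instead of a lookup table.
TOY_VECTORS = {
    "map": (1.0, 0.2, 0.0),
    "mile": (0.9, 0.1, 0.0),
    "drive": (0.9, 0.3, 0.0),
    "home": (0.3, 1.0, 0.0),
    "address": (0.4, 0.9, 0.0),
    "banana": (0.0, 0.0, 1.0),
    "soup": (0.0, 0.1, 1.0),
}


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def greedy_match_score(reference, candidate, vectors=TOY_VECTORS):
    """F1 of greedy best-match similarities between two word lists."""
    ref = [vectors[word] for word in reference]
    cand = [vectors[word] for word in candidate]
    # Recall: how well each reference word is covered by the candidate.
    recall = sum(max(cosine(r, c) for c in cand) for r in ref) / len(ref)
    # Precision: how well each candidate word is supported by the reference.
    precision = sum(max(cosine(c, r) for r in ref) for c in cand) / len(cand)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    stimulus = ["map", "mile", "home"]
    related = greedy_match_score(stimulus, ["map", "drive", "address"])
    unrelated = greedy_match_score(stimulus, ["banana", "soup"])
    print(f"related: {related:.3f}, unrelated: {unrelated:.3f}")
```

Even with no exact word overlap beyond "map," the distance-and-place paraphrase scores far above the unrelated food words, which is the sense in which a decoded sentence can be "right" without matching the original word for word.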

In addition to imagined speech applications for nonverbal communication, this system could be used for a number of therapeutic, scientific, or commercial purposes.

My first and foremost concern: the decoder is not a self-contained device, but rather software. It requires fMRI scans from a machine that costs several hundred thousand dollars. In other words, the decoder is far from commercially available. The researchers did suggest that portable systems, such as functional near-infrared spectroscopy, could be used to adapt the decoder to a more practical form for the individual, but time will tell whether the system becomes portable or affordable enough for the average person.

However, I am optimistic that this system could pave the way for similar technology to fill the void in brain-computer interfaces for nonverbal individuals. I recently discussed an in-ear bioelectronic device that conveys brain signals to computers via a removable electronic earpiece. Perhaps the decoder software could be used in a similar device geared toward nonverbal people.

Another concern that came to mind was privacy, though the researchers address it. If my thoughts can be read by the decoding device, could they be recorded if I were unwilling or unaware? The answer is a resounding no. Dr. Tang and colleagues tested whether an untrained decoder, or a decoder trained on another individual, could be used on a new individual; it could not. They also tested whether the decoder could pick up thoughts the participant was not trying to convey; it could not do that either.

Ultimately, this is more of a fascinating novel technology than a breakthrough brain-machine interface device. I look forward to seeing how the decoder software is used in the coming years, perhaps integrated into other systems to create a useful product for the average person.